Low-Complexity Intervisibility in Height Fields

Abstract

Global illumination systems require intervisibility information between pairs of points in a scene. This visibility problem is computationally complex, and current interactive implementations for dynamic scenes are limited to crude approximations or small amounts of geometry. We present a novel algorithm to determine intervisibility from all points of dynamic height fields as visibility horizons in discrete azimuthal directions. The algorithm determines accurate visibility along each azimuthal direction in time linear in the number of output visibility horizons. This is achieved by using a novel visibility structure we call the convex hull tree. The key feature of our algorithm is its ability to incrementally update the convex hull tree such that at each receiver point only the visible parts of the height field are traversed. This results in low time complexity; compared to previous work, we achieve two orders of magnitude reduction in the number of algorithm iterations and a speedup of 2.4 to 41 on 10242 height fields, depending on geometric content.

Material

Full paper, 2.8 MB, 14 pagesComputer Graphics Forum 2012

Eurographics Conference 2013 (invited)

Bibtex reference

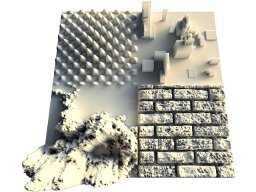

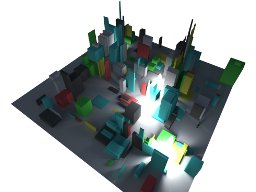

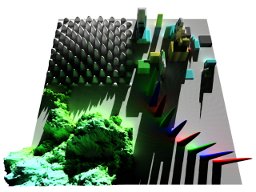

Screen captures

Eurographics 2013 presentation

Presentation slides, 11 MBIncremental convex hull tree progression (presentation video):

Author comments

When I first figured out how to calculate the intervisibility in this largely reduced complexity I got excited, only to get frightened not much later: it quickly became obvious that an efficient implementation on GPU would be a tricky task. It is essentially a recursive algorithm working on a tree structure (scattered memory accesses). Not only that, but the work to be done (per thread) is released in lumps making a SIMT architecture an unoptimal match for it. That's why I took optimization seriously from the get-go. Too seriously I would say, in retrospect.

It took me less than a week to make a reference CPU implementation of the algorithm. Then, I built a "simulator" for the algorithm which kept track of every memory access made, such that I could get signals like: "you read the same unchanged memory location twice" and "first you wrote value A to memory location X, and overwrote it with B moments later without reading it inbetween". Once that was done I started to revise the algorithm as I knew which way I was going every time I changed something.

After 15 or so exhaustive revisions I was happy with the way the algorithm was performing on the CPU: I was pretty sure it did almost no extra work, but not too many clock cycles were spent to guarantee that. It incorporated somewhat obfuscated bookkeeping for the lazy tree changes, but they were implemented through fast operations.

Then I ported the algorithm to CUDA and continued optimizations on the target hardware. After a while I was at a point where most of my optimizations actually set the performance back, so I thought I had passed the point where optimizations were no longer worth the effort. In hindsight, I had passed that point long ago and was borderline neurotic about the performance. While it took me less than a week to implement the initial version of the algorithm, I spent almost half a year performance optimizing it (not entirely full-time, though). And all that work doesn't even show up in the paper! Well, some of it does in Algorithm 2, but it's so obfuscated with all the overlapping optimizations that even I don't remember how it works anymore without taking a look at my notes :-) Don't worry though, I ran an extensive test suite on the algorithm after each optimization stage so as to make sure it still produces correct results.

Now that it is all behind me I can say I spent too much time on the implementation because that's not what the paper is about. On the other hand I did acquire some new skills in porting tricky algorithms for GPGPU.